Years ago my brother and I took a trip to Vegas. Wanted time together, wanted time away from all the normal life stuff, and probably more than anything just wanted to gamble a little.

We had a pretty good night our first night there. We were both up a pretty significant amount when we came across a roulette table that – at first – excited us. And then taught us some lessons.

For those who haven’t spent time in casinos, most roulette tables have a digital sign by them that displays the last 10-12 spins. Ten spins ago was 23 red. Nine spins ago was 14 black. Eight was ‘00’ green, etc… The table we stumbled onto had a glowing list of 12 red numbers. 12 in a row! Red! In a game that is roughly 50/50 between red and black, it felt like Homeric sirens calling out to us. ‘The next spin had to be black! Bet!!!! The next spin has to be black…!’

I’ll leave it to my (now) fellow probability nerds out there to laugh and explain why we were so wrong – and why we left that night barely breaking even. And yes, the sting stays with me. But my greater point is this:

Numbers are hard.

I don’t just mean basic math (although, if I’m honest, someone asked me how old I was the other day and I really struggled to calculate my age). I’m talking about interpreting numbers. Data. Statistics. Understanding the data that we’re presented with. And maybe most importantly, making decisions based on that data.
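Take the roulette table from earlier, interpreted properly. Below is a quick simulation sketch. It assumes an American double-zero wheel like the one at our table (hence the ‘00’ on the sign) and a fair, independent spin every time. It finds every spin that came right after 12 or more reds in a row and checks how often black came up. The function name and parameters are made up for illustration. If the wheel has no memory, that rate should hover around the same 18-in-38 (about 47.4%) you get on any single spin.

```python
import random

# American wheel: 18 red, 18 black, and two green pockets (0 and 00).
WHEEL = ["red"] * 18 + ["black"] * 18 + ["green"] * 2

def black_rate_after_red_streak(streak_len=12, spins=2_000_000, seed=1):
    """Hypothetical helper: simulate fair, independent spins and return
    (number of spins that followed a red streak, share of those that were black)."""
    rng = random.Random(seed)
    run_of_red = 0      # length of the current run of consecutive reds
    streaks_seen = 0    # spins that came right after >= streak_len reds
    blacks_after = 0    # how many of those spins landed on black
    for _ in range(spins):
        result = rng.choice(WHEEL)
        if run_of_red >= streak_len:
            streaks_seen += 1
            if result == "black":
                blacks_after += 1
        # Extend the red run, or reset it on black or green.
        run_of_red = run_of_red + 1 if result == "red" else 0
    rate = blacks_after / streaks_seen if streaks_seen else float("nan")
    return streaks_seen, rate

seen, rate = black_rate_after_red_streak()
print(f"Spins that followed 12+ reds in a row: {seen}")
print(f"Share of those spins that came up black: {rate:.1%}")
print(f"Chance of black on any single spin: {18 / 38:.1%}")
```

A run longer than 12 gets counted more than once. That's fine for this purpose, because every counted spin still had at least 12 reds immediately before it. The streak tells you nothing about the next spin.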

You could write books about the ways people fail in their use of data. (Narrator’s voice: In fact, many, many people already have.) I’m not gonna write a book – I’m nowhere near qualified to do that. I do, however, want to call out some of the key mistakes I think businesses make in gathering, evaluating, and acting on data. We’ve never been better equipped to accumulate it. We have spreadsheets and multi-terabyte databases and statistical analysis tools and data scientists…and yet…

Survivorship Bias

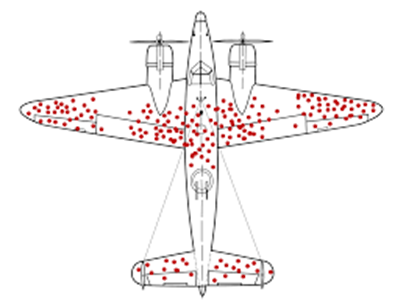

It’s not often you get a really cool picture to perfectly illustrate a complex data problem, but here we are:

During WWII, the Allies studied aircraft returning to base after bombing runs. The image here illustrates the kind of pattern they found: you can see all the holes that were punched in the returning aircraft. They immediately knew where to fortify the planes.

Except they didn’t.

Abraham Wald, a person roughly none of us have ever heard of, was a Hungarian-born mathematician working with the Statistical Research Group at Columbia University. He took a look at this study and said (I’m paraphrasing here…): “Hey. Dumbasses. These are the planes that made it back. The holes show you where a plane can get shot and still come home. The planes that didn’t make it back were probably hit in the places where you don’t see any holes. Armor those.”
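A toy simulation shows why Wald’s reasoning holds up. This is a sketch with invented numbers, not the WWII data. It assumes every section of a plane is equally likely to take a hit, but a hit to the engine or cockpit is far more likely to bring the plane down. The `LOSS_CHANCE_PER_HIT` values and the `survey_returning_planes` helper are hypothetical. If you survey only the planes that come home, the engine looks like the safest place to get shot.

```python
import random
from collections import Counter

# Hypothetical numbers for illustration only: each section is equally likely
# to be hit, but a hit in some sections is far more likely to down the plane.
LOSS_CHANCE_PER_HIT = {"engine": 0.6, "cockpit": 0.5, "fuselage": 0.05, "wings": 0.1, "tail": 0.1}

def survey_returning_planes(n_planes=10_000, hits_per_plane=3, seed=7):
    rng = random.Random(seed)
    sections = list(LOSS_CHANCE_PER_HIT)
    all_hits = Counter()       # every hit, including planes that never came back
    returned_hits = Counter()  # only the hits the survey team could actually inspect
    for _ in range(n_planes):
        hits = [rng.choice(sections) for _ in range(hits_per_plane)]
        all_hits.update(hits)
        # The plane makes it home only if it survives every one of its hits.
        survived = all(rng.random() > LOSS_CHANCE_PER_HIT[h] for h in hits)
        if survived:
            returned_hits.update(hits)
    return all_hits, returned_hits

all_hits, returned_hits = survey_returning_planes()
total_all = sum(all_hits.values())
total_returned = sum(returned_hits.values())
for section in LOSS_CHANCE_PER_HIT:
    print(f"{section:9} share of all hits: {all_hits[section] / total_all:5.1%}   "
          f"share of hits on returning planes: {returned_hits[section] / total_returned:5.1%}")
```

Engines get hit just as often as everything else in this model. The planes hit there mostly never show up in the survey.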

What does this mean for your business? We tend to focus on the things we can measure (foreshadowing, btw). We can measure customer satisfaction. What we often forget to measure is why ex-customers left. What broke the proverbial camel’s back? Maybe the customer who stayed is upset about a seven-minute wait on hold, while the one who left didn’t like the script the CS rep read to them. Everyone knows it costs more to acquire a new customer than to retain an existing one, so why isn’t our focus on the ones who left?

This isn’t an argument to throw out standard ‘customer satisfaction’ measurement. Just a reminder that your biggest cost isn’t a mildly annoyed customer – it’s a customer who’s gone.

The McNamara Fallacy

This is the foreshadowing I referred to earlier…

(As a side note, I’ve often heard it said that most advances in our human understanding come through the military, space programs, or porn. Rest assured there will be exactly zero porn references in this post. You might get a fair amount of military, though.)

I’m not quite old enough to have vivid memories of the Vietnam War. I am old enough to have vague memories of Walter Cronkite on TV every night talking about it. And I definitely grew up hearing the name Robert McNamara. (Another side note: if you have any interest in history, especially ‘recent’ American history, do yourself a favor and read David Halberstam’s *The Best and the Brightest*.)

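Turns out there was a deep belief in the early/mid 1960s that numbers would/could save us. Think RAND Corporation. If we could only quantify, we could solve. And Robert McNamara was a true believer: he’d run statistical control for the Army Air Forces in WWII, then brought the same numbers-first approach to Ford, where he rose to president before Kennedy made him Secretary of Defense in 1961.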

So skip ahead about 5-6 years. The war in Southeast Asia isn’t going great. But enemy body counts are mounting, and on a spreadsheet that sounds great. ‘Hilltops won’? Great stat (they were subsequently lost, but that’s a different issue). And then skip ahead 5-6 years more. It’s over. Not a great ending.

And here’s where poor Mister (sorry, Defense Secretary) McNamara gets this particular logical fallacy permanently attached to his name. The McNamara fallacy can generally be stated as:

- Measure whatever can be easily measured.

- Disregard that which cannot be measured easily.

- Presume that which cannot be measured easily is not important.

- Presume that which cannot be measured easily does not exist.

McNamara could count the bodies, but he couldn’t quantify the factor most historians seem to be coalescing around at this point, forty years on: the will of the North Vietnamese people.

Business leaders have more data than they’ve ever had in the history of the world. At their fingertips. Whenever they want it. But the question has to be asked – every day, at every moment – critically…

What non-quantifiable factors am I missing?

Goodhart’s Law

This may be my favorite. It touches, in a way, on every other data fallacy. Goodhart’s Law is pretty simple:

When a measure becomes a target, it ceases to be a good measure.

I also love it because it’s a really difficult problem to solve. Take an often-cited example (which has nothing to do with the military, space exploration, or porn…):

At some point during British rule in India, the colonial government decided there were too many deadly cobras hanging around. They came up with a *cough, cough* brilliant solution: offer a bounty for every cobra skin turned in. Yeah…so…you’ll be shocked to learn that people figured out that if you raised cobras and turned in the skins, you could make lots of money. And when the government caught on and scrapped the bounty, the breeders let their now-worthless snakes go, leaving more cobras than before.

And the point is this: once you turn a metric into a target, people will figure that metric out, adapt their behavior, game the system, and before you know it, the metric is meaningless.

So Give Up?

Mark Twain popularized the line “There are three kinds of lies: lies, damned lies, and statistics,” attributing it to Benjamin Disraeli. It’s as brilliant as it is opaque. Data is important – critical. Our current ability to gather and analyze it provides insights that were impossible even twenty years ago. And yet there are roughly two dozen data fallacies that I thought about including in this article. It’s almost too easy. I think the moral is that we put our sole faith in data at our own risk.

No amount of information, or analysis, or data science will give us all the answers. Whatever we learn from today’s data has to be tempered and adjusted by what we learn tomorrow. By what we know about the world. By the possibilities we haven’t even considered yet.

We have to constantly reevaluate what we’re measuring, why we’re measuring it, and what we think it’s telling us.

My brother and I had data. We thought the implications of that data were clear.

We ate at the half-priced buffet instead of L’Atelier.

Don’t be me and my brother.